Just as having the right web analytics data is critical to making smart marketing decisions, having the right set of tools is equally imperative when it comes to testing & tuning your Google Analytics implementation. Read on to discover the tools used by one Analytics Pro in troubleshooting and solving Google Analytics problems every day.

Why you need tools and what you can use them for

Implementing Google Analytics can be easy - just copy and paste the script produced during the account or profile creation process, right? Yes, and no. For more complex websites, it's a good idea to take some extra steps yourself, or hire someone, to validate your installation and make sure everything's working as it should.

When problems arise they are usually easy to spot within Google Analytics reports. Odd data such as a high degree of "self-referrals" (visits being reported as "referred" from your own domain name), a strangely high rate of conversions for an unexpected traffic source or medium, or an amazingly low bounce rate (3.8% bounce rate isn't really good, it's broken) are signs something may be wrong.
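To make the idea of a "self-referral" concrete, here is a minimal Python sketch that flags referrers coming from your own domain or one of its subdomains. The function name `is_self_referral`, the domain `example.com`, and the sample referrer URLs are all hypothetical; this is an illustration of the check, not how Google Analytics itself classifies referrals.

```python
from urllib.parse import urlsplit

def is_self_referral(referrer_url: str, site_domain: str) -> bool:
    """Return True when the referrer host is the site itself or one of its subdomains."""
    host = (urlsplit(referrer_url).hostname or "").lower()
    site = site_domain.lower().lstrip(".")
    return host == site or host.endswith("." + site)

# Hypothetical referrers, e.g. copied out of a referral report
referrers = [
    "http://www.example.com/checkout/step2",
    "http://www.google.com/search?q=widgets",
    "http://shop.example.com/cart",
]

# Keep only the referrers that point back at our own (assumed) domain
self_referrals = [r for r in referrers if is_self_referral(r, "example.com")]
print(self_referrals)
```

If a large share of your referrers end up in a list like `self_referrals`, that is a hint to start digging with the tools below.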

Enter the toolbox! In it you'll find an array of resources for quickly identifying the root causes of Google Analytics anomalies - those most commonly being:

- JavaScript errors,

- cookie problems, or

- client-side page load time issues (not to be confused with slow connections... this is different).

Tools every Google Analytics professional should have

1) The Browser to Start with: Firefox

The Firefox browser is probably the most important tool for technical debugging work with Google Analytics. The browser itself isn't what matters so much as the myriad add-ons that are available for it. To get started on building your toolbox, get Firefox if you don't have it already (and don't worry, there are some tools for Internet Explorer too!).

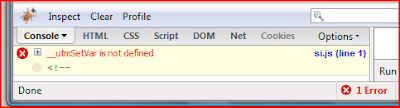

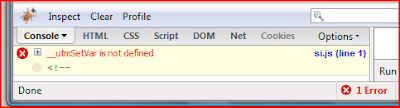

2) Working with JavaScript: Firebug for Firefox

This is where the march of add-ons for Firefox begins. The first and probably most important tool in the box is Firebug, an add-on for Firefox. Use the following Firebug features when debugging Google Analytics implementations:

- Detecting JavaScript errors quickly and easily - identify the script and line of code within the script that is the culprit

- Testing JavaScript code within the browser environment without having to edit an actual page on the server using the script console window in Firebug

Firebug can do much more than just detect script errors and help you rapidly test JavaScript, but these applications are particularly useful for Google Analytics technical work, especially when used in conjunction with additional tools detailed below.

3) Working with Cookies: Web Developer Toolbar in Firefox

The Web Developer Toolbar is most useful for cookie analysis and diagnosis when working with Google Analytics. It is the fastest way to see exactly which cookies are currently set for the page you are viewing. You can easily see key information for each cookie, find the "utm" cookies, and view details such as the domain the cookies were written for and what the values are.

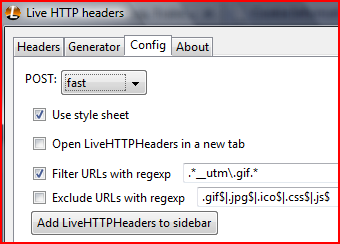
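If you only have a raw cookie string to work with (copied from a request header, for example), a few lines of Python can pick out the "utm" cookies for you. This is a minimal sketch: the function name `utm_cookies` and every value in the sample string are made up for illustration, and real cookie values will look different.

```python
def utm_cookies(cookie_header: str) -> dict:
    """Extract cookies whose names start with "__utm" from a Cookie header string."""
    cookies = {}
    for pair in cookie_header.split(";"):
        # Split on the first "=" only, since cookie values may contain "=" themselves
        name, sep, value = pair.strip().partition("=")
        if sep and name.startswith("__utm"):
            cookies[name] = value
    return cookies

# Hypothetical cookie string; the values are placeholders, not real visitor data
sample = (
    "sessionid=abc123; __utma=1.234.5.6.7.8; __utmb=1.1.10.7; __utmc=1; "
    "__utmz=1.7.1.1.utmcsr=google|utmccn=(organic)|utmcmd=organic"
)

for name, value in utm_cookies(sample).items():
    print(name, "=", value)
```

Note that a cookie string like this does not carry the domain each cookie was written for; that detail is exactly what the Web Developer Toolbar view adds.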

4) Tracking the Data Stream: Live HTTP headers

Debugging JavaScript and cookies is where troubleshooting begins. Once you are confident the scripts are working properly and cookies are appropriately set, the reporting mechanism for Google Analytics, the utm.gif tracking hit, must still take place in order for data to be reported into your Google Analytics account.

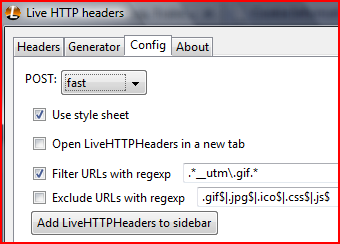

Live HTTP headers is a tool of choice for identifying when these utm.gif tracking hits take place.
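Once you have captured a utm.gif hit, it helps to break its query string into readable fields. Here is a small Python sketch of that step. The hit URL below is a made-up example (placeholder account ID, hostname, and page), and `parse_utm_hit` is a hypothetical helper name; only the general idea of reading the "utm" parameters off the request is the point.

```python
from urllib.parse import urlsplit, parse_qs

def parse_utm_hit(url: str) -> dict:
    """Decode the query string of a utm.gif request into a name -> value dict."""
    query = parse_qs(urlsplit(url).query)
    # parse_qs returns a list per parameter; keep the first value of each
    return {name: values[0] for name, values in query.items()}

# Hypothetical hit, shortened for readability; real hits carry more parameters
hit = (
    "http://www.google-analytics.com/__utm.gif?utmwv=4.3&utmn=1234567890"
    "&utmhn=www.example.com&utmdt=Home%20Page&utmp=%2Findex.html&utmac=UA-XXXXX-X"
)

fields = parse_utm_hit(hit)
print(fields["utmhn"])  # hostname the hit was sent for
print(fields["utmp"])   # page path being reported
print(fields["utmac"])  # account ID receiving the data
```

Checking fields like the hostname and account ID is a quick way to confirm the hit is reporting what you expect, to the account you expect.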

Bonus configuration option for Live Headers: under the "config" tab enter ".*__utm\.gif.*" (without the quotes) into the "Filter URLs with regexp" field, and make sure the field is checked. This will limit the Live Headers window to only show utm.gif hits. Otherwise, finding one or two utm.gif hits amidst all the other requests that fly by may feel like the proverbial search for a needle in the haystack.
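To see what that filter expression does, here is the same regular expression applied to a handful of request URLs in Python. The URLs and the `utm_hits` helper are invented for the example; only the pattern itself comes from the tip above.

```python
import re

# The same pattern entered in the "Filter URLs with regexp" field
UTM_GIF = re.compile(r".*__utm\.gif.*")

def utm_hits(urls):
    """Return only the URLs that the filter pattern matches."""
    return [u for u in urls if UTM_GIF.match(u)]

# Hypothetical requests seen while loading a single page
requests = [
    "http://www.example.com/index.html",
    "http://www.example.com/images/logo.png",
    "http://www.google-analytics.com/ga.js",
    "http://www.google-analytics.com/__utm.gif?utmwv=4.3&utmhn=www.example.com",
]

for url in utm_hits(requests):
    print(url)
```

Notice that the request for ga.js is filtered out too: the pattern isolates the tracking hit itself, not the script that generates it.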

5) Page Execution Speed: Chrome JavaScript Console

The JavaScript Console in Google's new Chrome Browser is perfect for detecting potential issues on sites that have a lot of other JavaScript running, or that have the Google Analytics tags placed on the page in a way that lets other elements delay the code from running. The JavaScript console "resources" pane shows the number of seconds it takes for the Google Analytics script to be loaded and the utm.gif tracking hit to run. Consider this example: it took 6.58 seconds from when the browser began loading this page to when the ga.js file was loaded - and it took even more time before the utm.gif hit was fired! How many people leave before 6.58+ seconds? We will never know because of a latency issue on this page.
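The arithmetic behind that example can be sketched in a few lines of Python. Assume you have jotted down, for each resource, how many seconds after the page started loading it finished. Only the 6.58 second figure for ga.js comes from the example above; the other URLs and timings, and the helper name `seconds_until`, are hypothetical.

```python
def seconds_until(resources, needle):
    """Return the finish time of the first resource whose URL contains needle, or None."""
    for url, finished_at in resources:
        if needle in url:
            return finished_at
    return None

# (URL, seconds after page start when the resource finished); values are illustrative
timeline = [
    ("http://www.example.com/", 0.42),
    ("http://www.example.com/js/slideshow.js", 3.10),
    ("http://www.google-analytics.com/ga.js", 6.58),
    ("http://www.google-analytics.com/__utm.gif?utmwv=4.3", 7.05),
]

ga_loaded = seconds_until(timeline, "/ga.js")
hit_sent = seconds_until(timeline, "__utm.gif")

print("ga.js loaded after", ga_loaded, "seconds")
print("tracking hit sent after", hit_sent, "seconds")
# Any visitor who leaves before hit_sent seconds is never recorded
print("delay between script load and hit:", round(hit_sent - ga_loaded, 2), "seconds")
```

The number to watch is the time until the tracking hit is sent, since that is the window during which a departing visitor goes uncounted.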

Tip: if you detect a latency problem using this tool, consider optimizing the other JavaScript running on your site, optimizing image files, or placing the Google Analytics code higher in the page so that it does not have to wait for everything else to complete before it runs (note that placing the code in the `<head>` of the page can bring some additional dependencies with it, so consider seeking the counsel of an experienced Google Analytics professional if considering this change).

Tools for Internet Explorer

While many will argue that Firefox or Chrome is a "better browser," we must face the reality that, for now at least, Internet Explorer still leads the global market in browser use. Thus, if you do all your debugging in Firefox or Chrome, you may easily miss problems that would arise for Internet Explorer users. Or perhaps you're already aware of such problems and need to diagnose them further. Here are a few tools that are available for IE.

6) JavaScript Debugging in Internet Explorer: DebugBar

DebugBar is sort of like an Internet Explorer hybrid incarnation of the Web Developer Toolbar and Firebug add-ons for Firefox. Using this tool you can track down JavaScript errors in Internet Explorer much as you would with Firebug, with some added advantages. You really have to check it out to get a feel for all the features. Bottom line: use this tool to analyze JavaScript errors you suspect are holding up accurate Google Analytics reporting.

7) Live Data Stream Analysis in Internet Explorer: Fiddler2

Fiddler is like Live HTTP Headers, except that it is a standalone application that can detect HTTP traffic between any application on your computer and outside web servers. This makes it more accurate than Live Headers in Firefox. It can be used with Internet Explorer, but also with other browsers, including Firefox. The tools for analyzing captured requests, utm.gif hits included, are superior to Live HTTP Headers in many ways.

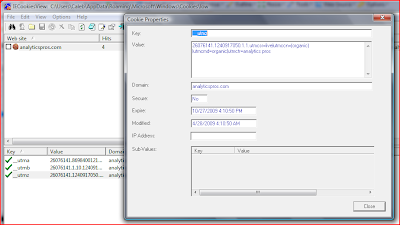

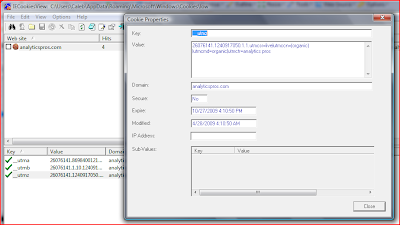

8) Cookies in Internet Explorer: IE Cookies Viewer

This small but powerful tool lets you easily find, view, and even modify cookies for Internet Explorer. It is indispensable for Google Analytics diagnostic and troubleshooting work when encountering cookie domain issues.

In Conclusion

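So, there you have it: a plethora of tried and true tools to get you to the trouble-free Google Analytics end you're seeking. Here's a recap shortlist of the tools:

- Firefox

- Firebug for Firefox

- Web Developer Toolbar for Firefox

- Live HTTP Headers

- Chrome JavaScript Console

- DebugBar for Internet Explorer

- Fiddler2

- IE Cookies Viewer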

Posted by Caleb Whitmore of Analytics Pros, a Google Analytics Authorized Consultant